Nvidia RT Cores vs. AMD Ray Accelerators – Explained

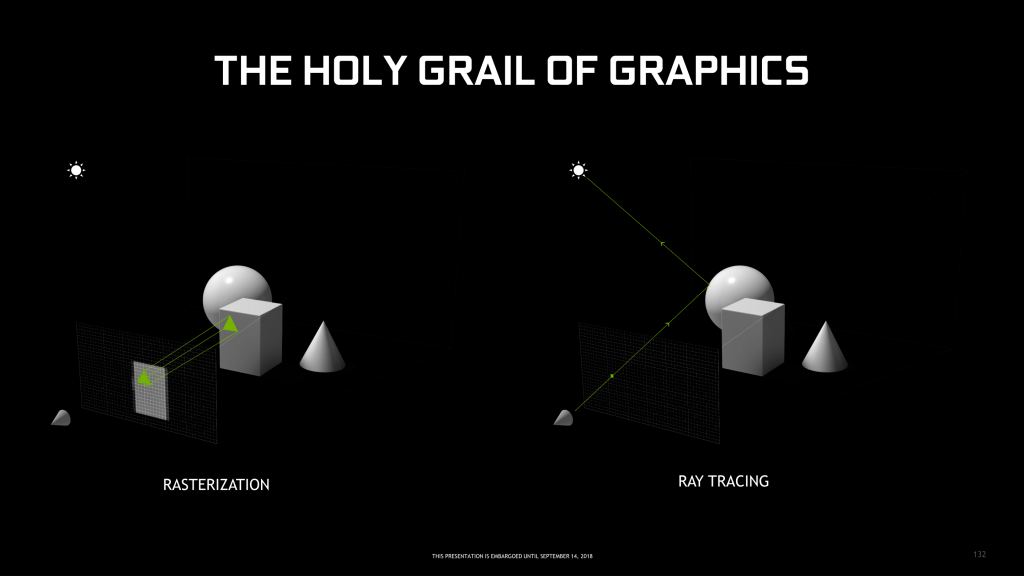

With the first generation of RTX graphics cards in 2018, Nvidia introduced a brand new feature that was supposed to change the landscape of gaming as we know it. The first-generation RTX 2000 series graphics cards were based on the new Turing architecture and brought along support for real-time Ray Tracing in games. Ray Tracing had already existed in professional 3D animation and similar offline rendering work, but Nvidia brought support for rendering games in real time using Ray Tracing rather than relying on traditional rasterization alone, which was supposed to be game-changing. Rasterization is the traditional technique through which games are rendered, while Ray Tracing uses complex calculations to depict how light would interact and behave in the game environment as it would in real life. You can learn more about Ray Tracing and Rasterization in this content piece.

Back in 2018, AMD had no answer for Nvidia’s RTX series of graphics cards and their Ray Tracing functionality. The Red Team was simply not ready for Nvidia’s innovative introduction, and this did put their top offerings at a significant disadvantage as compared to Team Green. The AMD RX 5700 XT was a fantastic graphics card for the price of $399 which rivaled the performance of the $499 RTX 2070 Super. The biggest problem for AMD though was the fact that the competition offered a technology that they did not possess. This coupled with the diverse feature set, DLSS support, stable drivers and overall superior performance put the Nvidia offerings at a significant advantage when it came to the Turing vs RDNA generation.

AMD RX 6000 series with Ray Tracing

Fast forward to 2020 and AMD has finally brought the fight to Nvidia’s top offerings. Not only has AMD introduced support for Real-Time Ray Tracing in games, but it has also released three graphics cards that are extremely competitive with the top graphics cards from Nvidia. The AMD RX 6800, the RX 6800 XT, and the RX 6900 XT are battling head-to-head with the Nvidia RTX 3070, RTX 3080, and RTX 3090 respectively. AMD is finally competitive again at the very top end of the product stack, which is promising news for consumers as well.

However, things are not entirely positive for AMD. Although AMD has introduced support for Real-Time Ray Tracing in games, its Ray Tracing performance received a lukewarm reception from both reviewers and consumers. That is understandable, since this is AMD’s first attempt at Ray Tracing and it would be a little unfair to expect the best Ray Tracing performance out there on the first try. However, it raises questions about how AMD’s Ray Tracing implementation works compared to the Nvidia implementation that we saw with the Turing and now the Ampere architecture.

Nvidia’s suite of RTX Technologies

The main reason why AMD’s attempt seems to be underwhelming as compared to Nvidia’s is that AMD was essentially playing catch-up with Nvidia and had more or less only 2 years of time to develop and perfect their implementation of Ray Tracing. Nvidia on the other hand has been developing this technology for far longer as they had nobody to compete against at the very top of the product stack. Nvidia not only delivered Ray Tracing support before AMD, but it also had a better support ecosystem built around the technology as well.

Nvidia designed its RTX 2000 series of graphics cards with Ray Tracing as the primary focus. This is evident throughout the design of the Turing architecture itself. Not only did Nvidia increase the number of CUDA Cores, but it also added dedicated Ray Tracing cores known as “RT Cores” which handle the bulk of the computations that are required for Ray Tracing. Nvidia also developed “Deep Learning Super Sampling” or DLSS, a technology that uses deep learning and AI to upscale and reconstruct the image and so compensate for the performance loss of Ray Tracing. Nvidia also introduced dedicated “Tensor Cores” in GeForce series cards which are designed to help in Deep Learning and AI tasks such as DLSS. In addition to that, Nvidia worked with game studios to optimize the upcoming Ray Tracing games for the dedicated Nvidia hardware so that performance could be maximized.

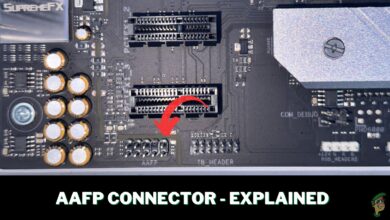

Nvidia’s RT Cores

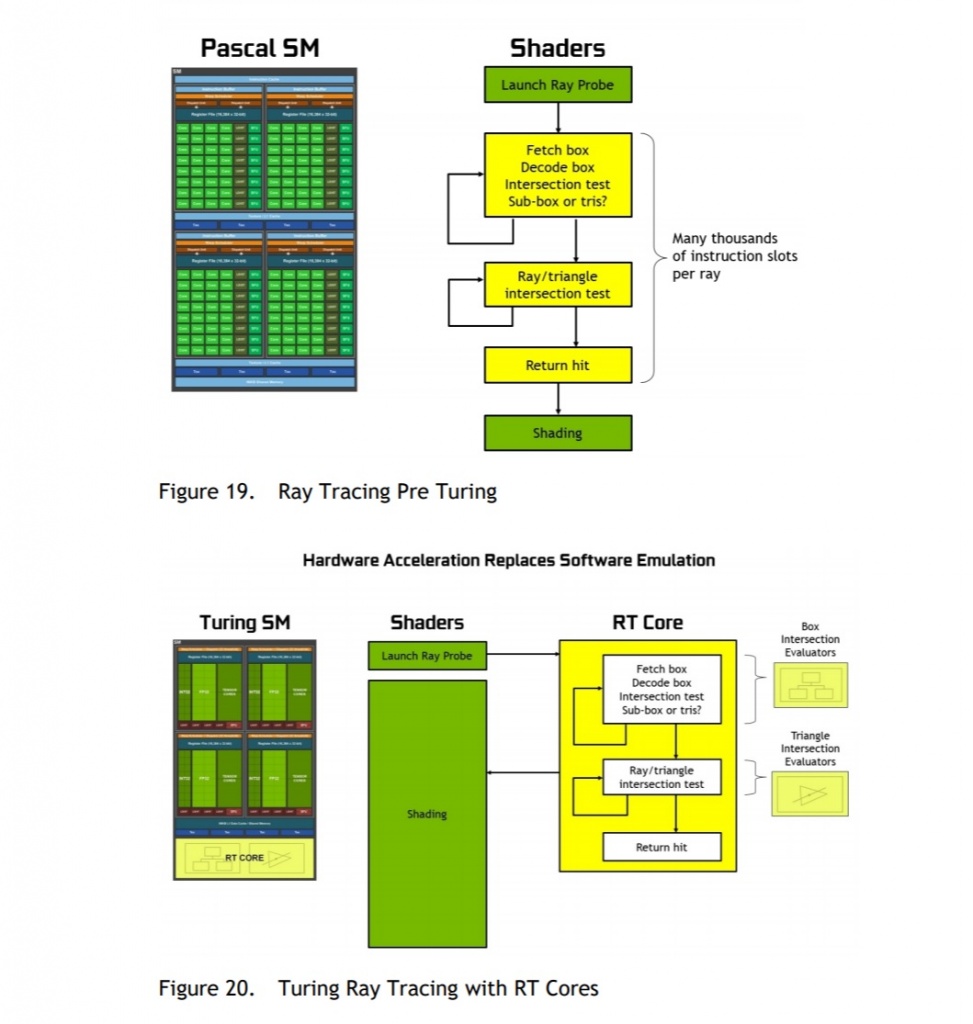

RT or Ray Tracing Cores are Nvidia’s dedicated hardware cores that are specifically designed to handle the computational workload associated with Real-Time Ray Tracing in games. Having specialized cores for Ray Tracing offloads much of that workload from the CUDA Cores that handle standard rendering in games, so that performance does not suffer too much from the CUDA Cores being saturated. RT Cores sacrifice versatility: they implement a specialized architecture for a specific set of calculations and algorithms in order to run them faster.

The best-known Ray Tracing acceleration techniques are BVH (Bounding Volume Hierarchy) and Ray Packet Tracing, and the schematic diagram of the Turing architecture also mentions BVH Traversal. The RT Core is designed to identify and accelerate the commands that pertain to Ray Traced rendering in games.
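To make the idea of a BVH more concrete, here is a minimal software sketch in Python. It is not how the RT Core works internally, since that hardware design is not public; it only illustrates the general technique. The names `Node`, `build_bvh`, `ray_hits_box`, and `traverse` are hypothetical, the median split is just one simple way to build the tree, and the leaves hold plain bounding boxes rather than triangles to keep the example short.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Box = Tuple[Vec3, Vec3]  # (minimum corner, maximum corner)


@dataclass
class Node:
    lo: Vec3                        # minimum corner of this node's box
    hi: Vec3                        # maximum corner of this node's box
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    prim: Optional[int] = None      # primitive index, set only on leaves


def build_bvh(boxes: List[Box], ids: Optional[List[int]] = None) -> Node:
    """Build a BVH by splitting at the median along the longest axis."""
    if ids is None:
        ids = list(range(len(boxes)))
    lo = tuple(min(boxes[i][0][a] for i in ids) for a in range(3))
    hi = tuple(max(boxes[i][1][a] for i in ids) for a in range(3))
    if len(ids) == 1:
        return Node(lo, hi, prim=ids[0])
    axis = max(range(3), key=lambda a: hi[a] - lo[a])
    ids = sorted(ids, key=lambda i: boxes[i][0][axis] + boxes[i][1][axis])
    mid = len(ids) // 2
    return Node(lo, hi, build_bvh(boxes, ids[:mid]), build_bvh(boxes, ids[mid:]))


def ray_hits_box(origin: Vec3, direction: Vec3, lo: Vec3, hi: Vec3) -> bool:
    """Slab test: does the ray hit the axis-aligned box at some t >= 0?"""
    t_near, t_far = 0.0, float("inf")
    for o, d, l, h in zip(origin, direction, lo, hi):
        if d == 0.0:
            # Ray is parallel to this pair of planes: it must start between them.
            if o < l or o > h:
                return False
            continue
        t0, t1 = (l - o) / d, (h - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True


def traverse(root: Node, origin: Vec3, direction: Vec3) -> Tuple[List[int], int]:
    """Return (sorted hit primitive indices, number of box tests performed)."""
    hits, tests, stack = [], 0, [root]
    while stack:
        node = stack.pop()
        tests += 1
        if not ray_hits_box(origin, direction, node.lo, node.hi):
            continue                 # whole subtree is skipped
        if node.prim is not None:
            hits.append(node.prim)
        else:
            stack.extend((node.left, node.right))
    return sorted(hits), tests


# 64 unit cubes spaced along the x axis, and one ray fired along +z.
boxes = [((2.0 * i, 0.0, 0.0), (2.0 * i + 1.0, 1.0, 1.0)) for i in range(64)]
hits, tests = traverse(build_bvh(boxes), (4.5, 0.5, -5.0), (0.0, 0.0, 1.0))
print(hits, tests)
```

The point of the hierarchy is the `continue` in `traverse`: once a ray misses a node's box, everything underneath that node is skipped, so the ray needs far fewer box tests than the 64 a brute-force check against every cube would take.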

According to Nvidia’s former Senior GPU Architect Yubo Zhang:

“[Translated] The RT core essentially adds a dedicated pipeline (ASIC) to the SM to calculate the ray and triangle intersection. It can access the BVH and configure some L0 buffers to reduce the delay of BVH and triangle data access. The request is made by SM. The instruction is issued, and the result is returned to the SM’s local register. The interleaved instruction and other arithmetic or memory IO instructions can be concurrent. Because it is an ASIC-specific circuit logic, performance/mm2 can be increased by an order of magnitude compared to the use of shader code for intersection calculation. Although I have left the NV, I was involved in the design of the Turing architecture. I was responsible for variable rate coloring. I am excited to see the release now.”

Nvidia also states in the Turing Architecture White Paper that RT Cores work together with advanced denoising filtering, a highly efficient BVH acceleration structure developed by NVIDIA Research, and RTX-compatible APIs to achieve real-time ray tracing on a single Turing GPU. RT Cores traverse the BVH autonomously, and by accelerating traversal and ray/triangle intersection tests, they offload the SM, allowing it to handle other vertex, pixel, and compute shading work. Functions such as BVH building and refitting are handled by the driver, and ray generation and shading are managed by the application through new types of shaders. This frees the SM units to do other graphical and computational work.
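For readers curious what a ray/triangle intersection test actually computes, the sketch below uses the Möller–Trumbore algorithm, a well-known software formulation. This is an illustration only: Nvidia does not document which method the RT Core circuitry uses, and the function name `ray_triangle` and the `eps` tolerance are assumptions made for this example.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin: Vec3, direction: Vec3,
                 v0: Vec3, v1: Vec3, v2: Vec3,
                 eps: float = 1e-9) -> Optional[float]:
    """Return the distance t along the ray to the hit point, or None on a miss."""
    e1, e2 = sub(v1, v0), sub(v2, v0)    # two edges sharing vertex v0
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:                   # ray is parallel to the triangle plane
        return None
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det              # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv_det      # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det             # distance along the ray
    return t if t > eps else None        # ignore hits behind the origin


triangle = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
print(ray_triangle((0.25, 0.25, -1.0), (0.0, 0.0, 1.0), *triangle))
```

Even in this compact form, a single test takes two cross products, several dot products, and a division. A Ray Traced frame needs a very large number of such tests, which is why moving them into fixed-function hardware relieves the SM so much.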

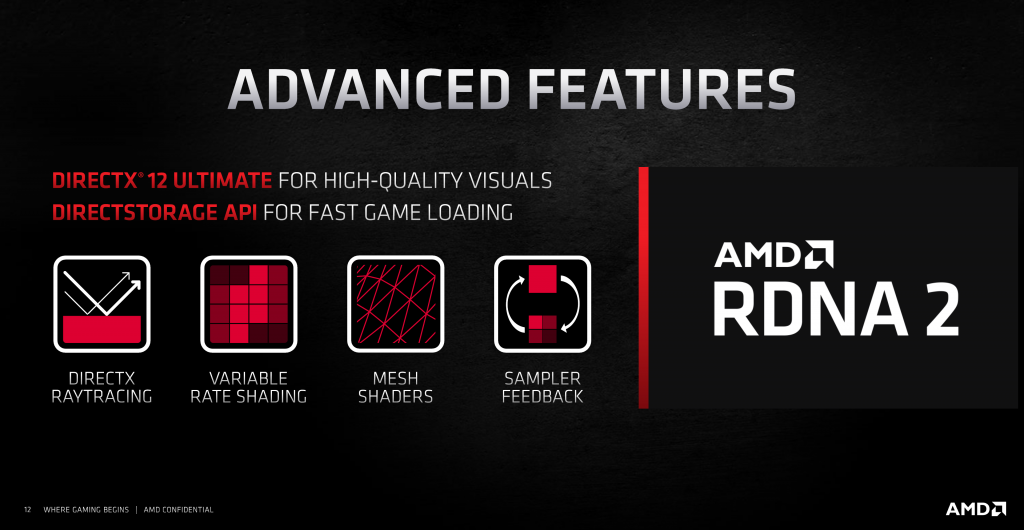

AMD’s Ray Accelerators

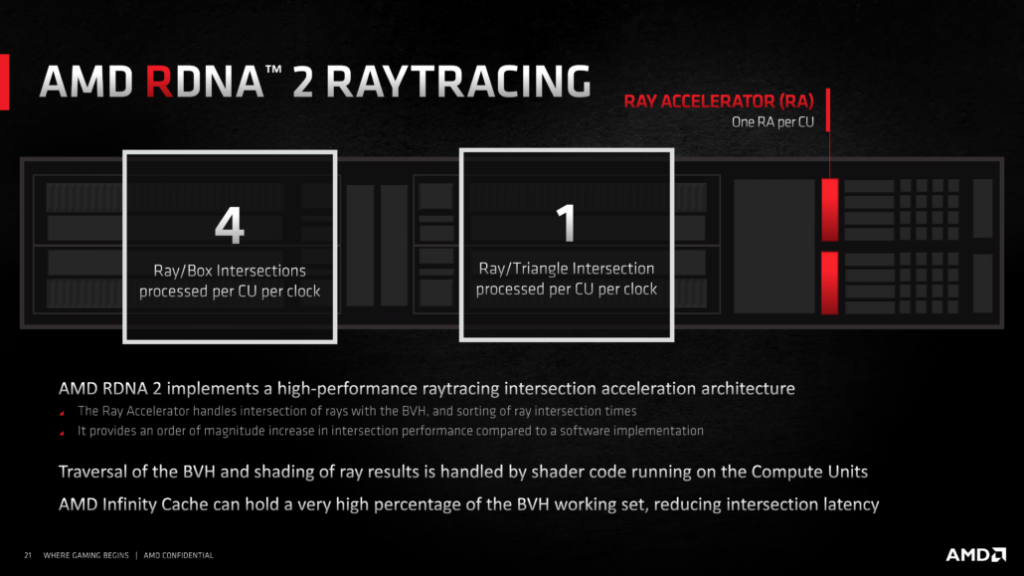

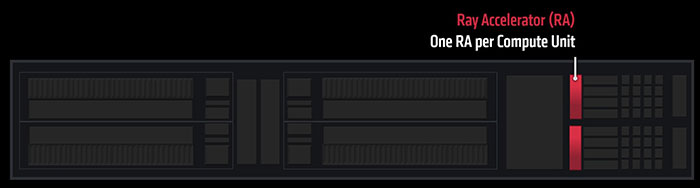

AMD has entered the Ray Tracing race with their RX 6000 series and with that, they have also introduced a few key elements to the RDNA 2 architectural design that help with this feature. To improve the Ray Tracing performance of AMD’s RDNA 2 GPUs, AMD has incorporated a Ray Accelerator component into its core Compute Unit Design. These Ray Accelerators are supposed to increase the efficiency of the standard Compute Units in the computational workloads related to Ray Tracing.

The mechanism behind the functioning of Ray Accelerators is still relatively vague; however, AMD has provided some insight into how these elements are supposed to work. According to AMD, these Ray Accelerators have the express purpose of traversing the Bounding Volume Hierarchy (BVH) structure and efficiently determining intersections between rays and boxes (and eventually triangles). The design fully supports DirectX Raytracing (Microsoft’s DXR), which is the industry standard for PC gaming. In addition to that, AMD utilizes a compute-based denoiser to clean up the specular effects of ray-traced scenes rather than relying on purpose-built hardware. This will probably put extra pressure on the mixed-precision capabilities of the new Compute Units.

Each Ray Accelerator is also capable of processing four bounding volume box intersections or one triangle intersection per clock, which is much faster than rendering a Ray Traced scene without dedicated hardware. There is a big advantage to AMD’s approach, which is that RDNA 2’s Ray Accelerators can interact with the card’s Infinity Cache. A large number of bounding volume structures can be held in the cache at the same time, which takes some of the load off data management and memory reads.
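As a rough back-of-the-envelope illustration, the per-clock figures above can be turned into a theoretical peak rate. The helper `peak_tests_per_second` is hypothetical, the count of 80 Ray Accelerators is the RX 6900 XT figure quoted in this article, and the 2 GHz clock is a round number chosen purely for the arithmetic, not an official specification. Real-world throughput will be lower, since this ignores memory latency and everything else the GPU is doing.

```python
BOX_TESTS_PER_CLOCK = 4        # per Ray Accelerator, as stated by AMD
TRIANGLE_TESTS_PER_CLOCK = 1   # per Ray Accelerator, as stated by AMD


def peak_tests_per_second(ray_accelerators: int, clock_hz: int,
                          tests_per_clock: int) -> int:
    """Theoretical upper bound: every unit busy on every clock cycle."""
    return ray_accelerators * tests_per_clock * clock_hz


ASSUMED_CLOCK_HZ = 2_000_000_000   # hypothetical 2 GHz, for illustration only
RAY_ACCELERATORS = 80              # RX 6900 XT

box_rate = peak_tests_per_second(RAY_ACCELERATORS, ASSUMED_CLOCK_HZ,
                                 BOX_TESTS_PER_CLOCK)
tri_rate = peak_tests_per_second(RAY_ACCELERATORS, ASSUMED_CLOCK_HZ,
                                 TRIANGLE_TESTS_PER_CLOCK)
print(f"{box_rate / 1e9:.0f} billion box tests/s")       # 640 billion
print(f"{tri_rate / 1e9:.0f} billion triangle tests/s")  # 160 billion
```

Under these assumptions the peak works out to 640 billion box tests or 160 billion triangle tests per second. The four-to-one ratio reflects how BVH traversal is dominated by cheap box tests, with the more expensive triangle tests reserved for the leaves.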

Key Difference

The biggest difference that is immediately obvious when comparing the RT Cores and the Ray Accelerators is that, while both perform fairly similar functions, the RT Cores are separate, dedicated hardware cores with a single function, while the Ray Accelerators are part of the standard Compute Unit structure in the RDNA 2 architecture. Not only that, Nvidia’s RT Cores are in their second generation with Ampere, with a lot of technical and architectural improvements under the hood. As things stand, this makes Nvidia’s RT Core implementation a more efficient and powerful Ray Tracing method than AMD’s implementation with the Ray Accelerators.

Since there is a single Ray Accelerator built into every Compute Unit, the AMD RX 6900 XT gets 80 Ray Accelerators, the RX 6800 XT gets 72 Ray Accelerators and the RX 6800 gets 60 Ray Accelerators. These numbers are not directly comparable to Nvidia’s RT Core numbers since those are dedicated cores built with a single function in mind. The RTX 3090 gets 82 2nd Gen RT Cores, the RTX 3080 gets 68 2nd Gen RT Cores and the RTX 3070 gets 46 2nd Gen RT Cores. Nvidia also has separate Tensor Cores in all of these cards which help in machine learning and AI applications like DLSS, which you can learn more about in this article.

Future Optimization

It’s hard to say at this juncture what the future holds in Ray Tracing for Nvidia and AMD, but one can make a few educated guesses by analyzing the current situation. As of the time of writing, Nvidia holds a pretty significant lead in Ray Tracing performance when compared directly to AMD’s offerings. While AMD has made an impressive start for RT, they’re still two years behind Nvidia in terms of research, development, support, and optimization. Most Ray Tracing titles available right now in 2020 are optimized to use Nvidia’s dedicated hardware better than what AMD has put together. This, combined with the fact that Nvidia’s RT Cores are more mature and more powerful than AMD’s Ray Accelerators, puts AMD at a disadvantage when it comes to the current Ray Tracing situation.

However, AMD is definitely not stopping here. AMD has already announced that they are working on an alternative to DLSS, a technology that is a massive help in improving Ray Tracing performance. AMD is also working with game studios to optimize upcoming games for their hardware, which shows in titles like Godfall and Dirt 5 where AMD’s RX 6000 series cards perform surprisingly well. Therefore we can expect AMD’s Ray Tracing support to get better and better with upcoming titles and the development of upcoming technologies like the DLSS alternative.

With that said, as of the time of writing Nvidia’s RTX Suite is just too powerful to ignore for anyone looking for serious Ray Tracing performance. Our standard recommendation will be the new RTX 3000 series of graphics cards from Nvidia over AMD’s RX 6000 series for anyone who considers Ray Tracing an important factor in the purchase decision. This might and should change with AMD’s future offerings, as well as improvements in both drivers and game optimization as time goes on.

Final Words

AMD has finally jumped onto the Ray Tracing scene with the introduction of their RX 6000 series of graphics cards based on the RDNA 2 architecture. While they do not beat Nvidia’s RTX 3000 series cards in direct Ray Tracing benchmarks, the AMD offerings do provide extremely competitive rasterization performance and impressive value, which may appeal to gamers who do not care about Ray Tracing as much. However, AMD is well on its way to improving Ray Tracing performance with several key steps in quick succession.

The approaches taken by Nvidia and AMD for Ray Tracing are rather similar, but the two companies use different hardware techniques to implement them. Initial testing has shown that Nvidia’s dedicated RT Cores outperform AMD’s Ray Accelerators that are built into the Compute Units themselves. This might not be much of a concern to the end-user, but it is an important thing to consider for the future, since game developers are now faced with a decision to optimize their RT features for one approach or the other.