1080p vs. 1080i: Understanding The Differences Between Video Resolutions

Are they both really the same?

There used to be a time when movies didn’t have 4K releases because that technology wasn’t around yet. Now, we’ve got several video resolutions, such as 720p, 1080i, 1080p, 2K and 4K, existing simultaneously.

Many people cannot tell some of the finer, cutting-edge qualities apart; often only those who work in a video-related field can discern between them. Today, we’ll demystify two resolutions that are both classified as HD. Let’s learn what differentiates 1080p from 1080i.

| 1080p | 1080i |
| --- | --- |
| Progressive scan | Interlaced scan |
| Better quality, less motion blur | Inferior quality with more motion artifacts |
| Requires more bandwidth | Requires less bandwidth |

Are They Both HD?

In this context, HD (high-definition) refers to a resolution of 1920×1080 pixels, often called Full HD, which means that there are 1920 columns and 1080 rows of pixels. Hence, both 1080p and 1080i are indeed HD resolutions. The main difference, however, lies in the suffixes at the end of their names.

How Do They Work?

The ‘i’ in 1080i stands for ‘interlaced’ while the ‘p’ in 1080p stands for ‘progressive’. To understand how they function differently, imagine that your monitor/TV screen is divided into 1080 rows, with each row consisting of 1920 pixels.

You should also know about the refresh rate of a screen, which is the rate at which the pixels are refreshed. These days, most monitors and TVs come with a refresh rate of 60Hz, which means that the screen is refreshed 60 times in a single second… or is it?

How an image appears on your screen actually depends on how it’s scanned. That’s what progressive and interlaced mean: they are two different approaches to scanning, and subsequently displaying, an image. Depending on how old your hardware and content are, you could be watching either.

Interlaced Scan

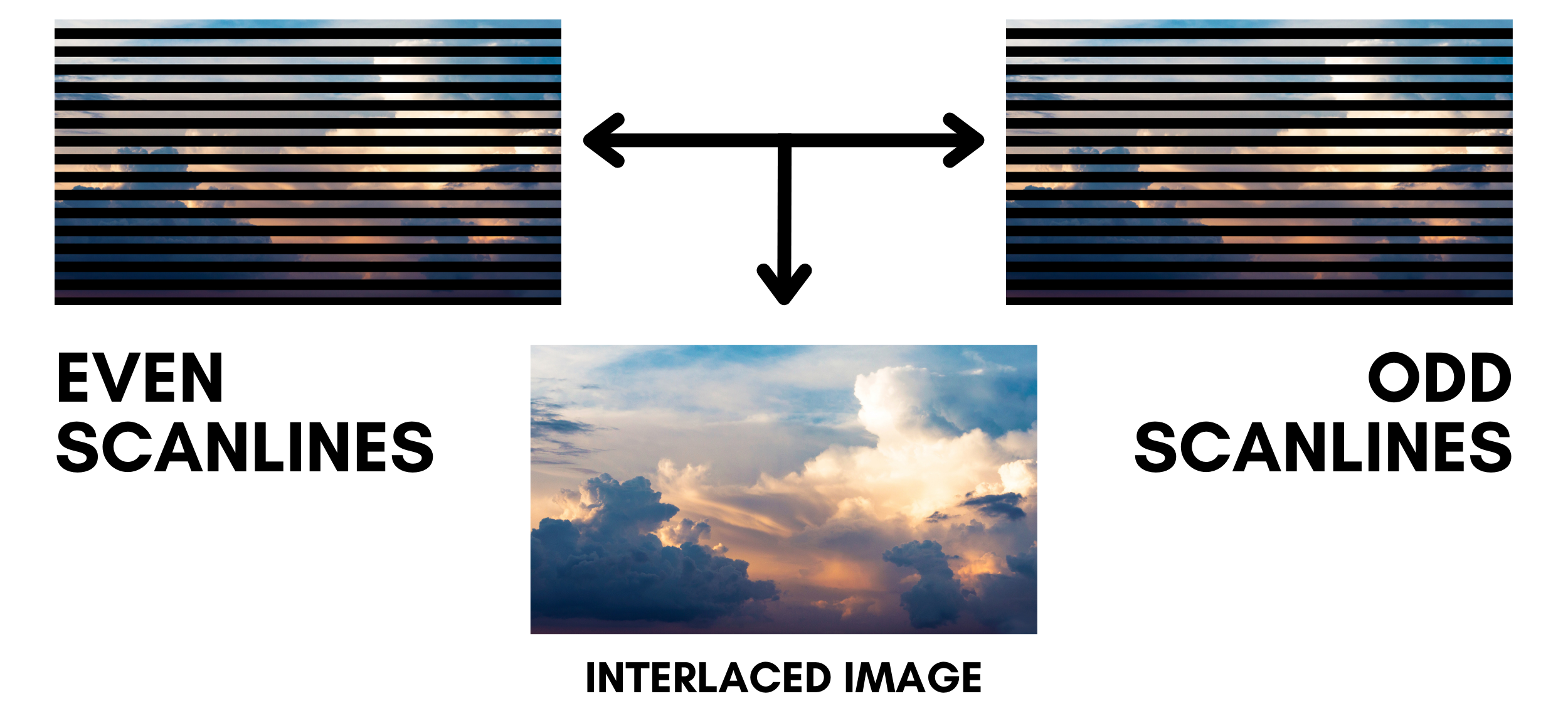

The interlaced scan operates by refreshing every alternate row of pixels on the screen. This means that one pass displays half of the image (say, the odd-numbered rows) and the following pass displays the other half. Each of these half-images is known as a field. So, technically, the frame rate of the content is half of the refresh rate, since every refresh draws only half a frame.
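To make the idea concrete, here is a minimal Python sketch of splitting a 1080-row frame into two half-images and stitching them back together. The function names (`split_into_fields`, `weave`) are our own illustrative choices, and the frame is just a list of labelled rows rather than real pixel data.

```python
def split_into_fields(frame):
    """Split a frame (a list of rows) into two interlaced half-images."""
    top_field = frame[0::2]      # rows 0, 2, 4, ...
    bottom_field = frame[1::2]   # rows 1, 3, 5, ...
    return top_field, bottom_field


def weave(top_field, bottom_field):
    """Rebuild a full frame by alternating rows from the two half-images."""
    frame = []
    for top_row, bottom_row in zip(top_field, bottom_field):
        frame.append(top_row)
        frame.append(bottom_row)
    return frame


ROWS = 1080
frame = [f"row {i}" for i in range(ROWS)]  # stand-in for real pixel rows

top, bottom = split_into_fields(frame)
print(len(top), len(bottom))         # 540 540
print(weave(top, bottom) == frame)   # True

# Each refresh draws only one half-image, so two refreshes make one full frame.
refresh_rate_hz = 60
full_frames_per_second = refresh_rate_hz / 2
print(full_frames_per_second)        # 30.0
```

Each refresh only has to carry 540 rows instead of 1080, which is where the halved frame rate comes from.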

Because this happens so rapidly, our eyes are unable to detect the flickering, and we perceive a complete moving picture.

The main disadvantage of the interlaced scan is its poor output quality during motion-heavy sequences: fight scenes, rapid camera flicks, etc. Because the interlaced scan only refreshes every alternate row on the screen, the two halves of a frame can show slightly different moments in time, and this results in a great loss in image quality whenever the picture changes quickly. Consequently, the video becomes blurry and hard to make out.
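One common way this loss shows up is a comb-like tearing along the edges of moving objects. The toy Python sketch below assumes a tiny 12×6 "screen" and a vertical bar that moves between two refreshes; the sizes and the helper names (`render`, `interlaced_capture`) are hypothetical and only meant to illustrate the effect.

```python
WIDTH, HEIGHT = 12, 6  # toy screen size, chosen only for readability


def render(bar_x):
    """Return a frame (list of strings) with a vertical bar at column bar_x."""
    return ["." * bar_x + "#" + "." * (WIDTH - bar_x - 1) for _ in range(HEIGHT)]


def interlaced_capture(earlier_frame, later_frame):
    """Take even rows from the earlier moment and odd rows from the later one."""
    return [
        earlier_frame[y] if y % 2 == 0 else later_frame[y]
        for y in range(HEIGHT)
    ]


earlier = render(bar_x=2)   # where the bar is during the first pass
later = render(bar_x=6)     # the bar has moved by the second pass

combed = interlaced_capture(earlier, later)
for row in combed:
    print(row)

# A still image is unaffected: both passes see the same picture.
still = interlaced_capture(earlier, earlier)
print(still == earlier)  # True
```

In the printed result, the bar sits at column 2 on even rows and column 6 on odd rows, so a straight edge turns into a jagged one. When nothing moves, the two passes agree and the picture is rebuilt perfectly.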

Progressive Scan

The progressive scan is different from, and ultimately better than, the interlaced scan. The progressive scan refreshes every pixel on the screen, from top to bottom, according to the refresh rate. Every row of pixels is scanned fully, one by one (hence the name “progressive”), and displayed accordingly. In this way, there is essentially no flickering at all.

What puts the progressive scan a cut above the interlaced scan is its ability to produce superior image quality during motion-heavy sequences. Naturally, the picture will still suffer from some degree of motion blur, but it will nevertheless be distinguishable and clear enough to understand.

1080p and 1080i Today

For an extensive period, the 1080p format was not used in streaming, primarily because it consumed a lot of bandwidth. Because the whole screen is refreshed on every pass, and at a very fast rate (60 times in a second), twice as much picture data has to be delivered and processed each second compared to 1080i, hence the high bandwidth usage.

Incidentally, most TV screens and monitors at that time came with a refresh rate of 50Hz (50 times in a second), which is better suited to 1080i. Internet speeds were also not as fast as they are today, so TV programs were aired in 1080i.

Even today, you can see programs being aired in 1080i. This is largely because more than 500 channels can now exist on a single cable network, so a huge amount of data needs to be processed and transferred to your TV.

Unless you’re using some kind of premium cable or satellite connection to watch TV, the only way the network can handle this data is to reduce it slightly. This results in 1080p being converted to 1080i, so that less data has to travel for each channel. That’s why IPTV is so popular these days, since it is more in line with modern streaming than traditional broadcasting.
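A rough back-of-the-envelope calculation shows the gap. The Python sketch below compares the raw, uncompressed data rate of the two formats at 60 refreshes per second. It assumes 24 bits per pixel, which is our own assumption, and it ignores compression entirely, so real broadcast and streaming bitrates are far lower than these figures.

```python
WIDTH, HEIGHT = 1920, 1080
REFRESH_HZ = 60
BITS_PER_PIXEL = 24  # assumption: 8 bits each for red, green and blue


def raw_bits_per_second(rows_per_refresh):
    """Uncompressed bits per second for the given number of rows per refresh."""
    return WIDTH * rows_per_refresh * BITS_PER_PIXEL * REFRESH_HZ


progressive = raw_bits_per_second(HEIGHT)      # all 1080 rows every refresh
interlaced = raw_bits_per_second(HEIGHT // 2)  # only 540 rows every refresh

print(f"1080p at 60Hz: {progressive / 1e9:.2f} Gbit/s")  # 2.99 Gbit/s
print(f"1080i at 60Hz: {interlaced / 1e9:.2f} Gbit/s")   # 1.49 Gbit/s
print(f"ratio: {progressive / interlaced:.0f}x")         # 2x
```

Whatever the exact numbers, the ratio is what matters: at the same refresh rate, progressive video carries twice as many rows per second as interlaced video.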

Which is Better?

The reasons listed above support the following conclusion: 1080p is definitely better than 1080i. Many movies, TV shows and sometimes even news broadcasts are motion-heavy. These scenes will inevitably have lower video quality, but they should not be so indecipherable that the viewer is unable to understand what exactly is happening. Therefore, 1080p has the clear advantage.

The old 50Hz displays were suitable for 1080i; the slower refresh rate already made motion-heavy scenes blurry, thus allowing 1080i to get away with it. However, on modern displays running at 60Hz and higher, this flaw is quite noticeable and proves how much finer 1080p actually is.

So, What About 4K?

4K is the newer resolution that boasts exceptional quality compared to 1080p and 2K. It is commonly denoted as 3840×2160: 3840 columns and 2160 rows of pixels. Because of the huge number of pixels on the screen, it allows a drastic improvement in image quality in terms of clarity, sharpness, colour vibrance, etc. It is described as ‘ultra-high definition’.

The reason for its limited use is the same as the one mentioned in the case of 1080i over 1080p: the sheer amount of processing power and bandwidth it requires. Therefore, it is used more in offline, stored formats, such as Blu-ray discs for movies. It is a common belief that, in a few years’ time, we will be able to stream and watch 4K on our cable or satellite connections with ease.
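To put that "huge number of pixels" into perspective, here is a small Python sketch that counts the pixels in each resolution using the dimensions quoted in this article. The dictionary and function names are just illustrative.

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
}


def pixel_count(name):
    """Total number of pixels for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


full_hd = pixel_count("1080p")
ultra_hd = pixel_count("4K")

print(f"1080p: {full_hd:,} pixels")   # 2,073,600 pixels
print(f"4K:    {ultra_hd:,} pixels")  # 8,294,400 pixels
print(ultra_hd / full_hd)             # 4.0
```

Doubling both the width and the height means every 4K frame holds four times as many pixels as a 1080p frame.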

Conclusion

1080p has been around for a while and has, undoubtedly, replaced 1080i. But the era of 1080p will soon end; its successor, 4K, has already joined the battlefield. Even 4K’s successor, 8K, is here. But that is a matter for another time. For now, we hope that you’ve learned how to distinguish between what is the true HD experience and what isn’t.

On top of the video resolution, everything you see online is compressed using a codec. Check out our article on H.264 vs H.265 to learn the difference between the two most common video codecs.
