Throwback To the Galaxy S4 and Why Air Gesture Remains a Gimmick Without Solid Software Support

Today, we see many improvements and advancements in the world of mobile phones. Compared to devices from the early 2010s, today’s phones are leaps ahead. This is not to say that the companies that manufacture these devices started adopting some alien tech. No, it is because of successive development cycles that we see the products that we do today.

We see so many features that have evolved from their original idea. Take Quick Charge, for example. The idea of fast charging was properly introduced back in 2013 with Quick Charge 1.0. The technology has since evolved to Quick Charge 4+ (or its equivalents from other companies), allowing phones to juice up to 50% in about 30 minutes or less. Mind you, the average battery size is around 2800-3000 mAh today. While many features introduced back in the day have made their way to devices now, some sadly could not make the cut. One such example is motion gestures.

Going back a couple of decades, the world’s idea of the future was controlling computers and machines with gestures. This could be seen in films such as Star Wars and Star Trek. Fast-forward to 2010, and Xbox made the dream come true with its Kinect. Three years later, Samsung brought the concept to cellphones.

Samsung Galaxy S4: An Introduction to Motion/Air Gestures on Mobile Phones

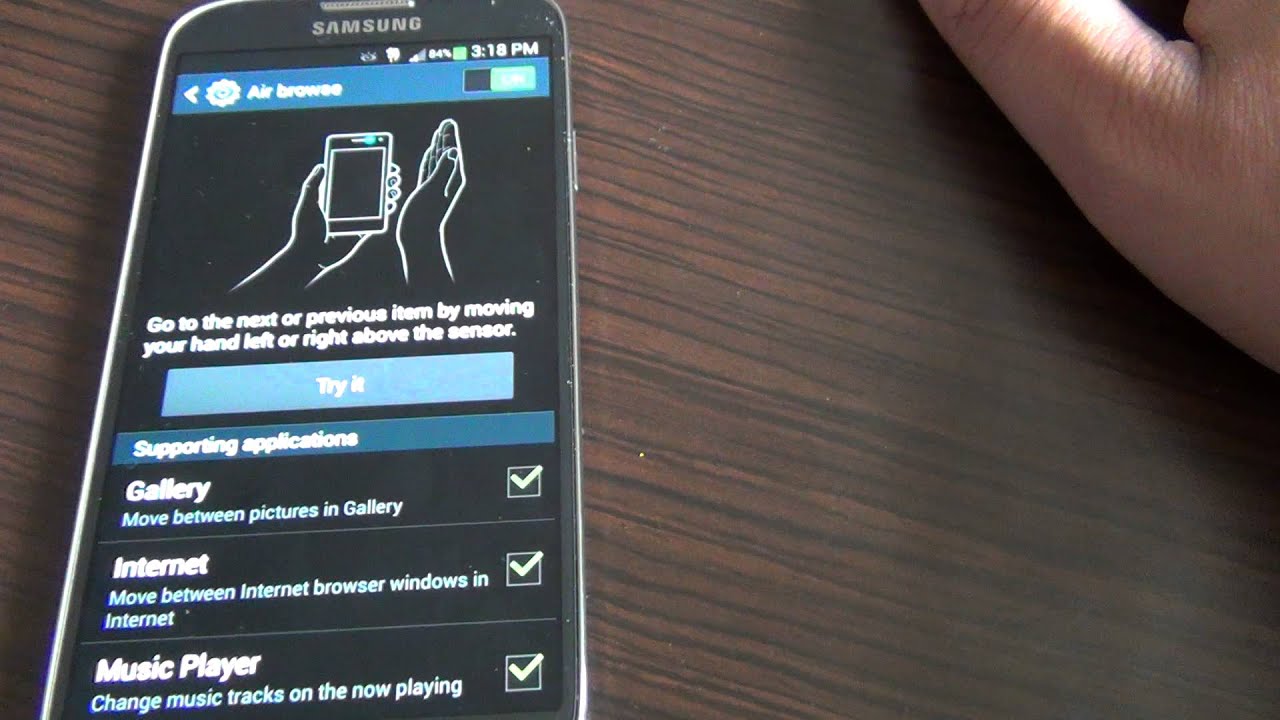

Samsung introduced its Galaxy S4 in 2013 with a plethora of features packed in a sleek and futuristic body. The smartphone had a 5-inch display, which counted as “huge” at the time, rocked the latest chipset and boasted features like none other. Among these features was Samsung’s version of motion gestures, called Air Gestures. The company had put in some smart features which allowed users to interact with their devices without having to touch the screen at all.

Samsung had installed a sensor near the Samsung logo, which allowed the phone to recognize hand movements and respond accordingly. The features included:

- Quick Glance: users could move their palm above the sensor (with the phone resting on a surface) to view notifications

- Air Jump: users could move their hands up and down in the native email or browser app to move the screen accordingly

- Air Call Accept: users could wave a hand from left to right to accept or reject calls

There were more features as well, which functioned along the same lines. While these features were quite innovative, their real-world applications were rather limited. Outside of the native apps, there wasn’t much use for them. From the user’s point of view, the concept wasn’t much more than a gimmick. They would show it off to their friends once or twice, and that was it. Perhaps this was just one of those features that developers couldn’t find a use for in their own apps.

A few months later, Samsung did carry these features over to the Galaxy Note 3, but that was about it. After that, perhaps user response dictated that Air Gesture wasn’t something people really wanted, and the company could go without adding an additional sensor to its devices. Just like that, an innovative feature met its untimely death.
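To make that limitation concrete, here is a minimal sketch of how such a feature set behaves when only the native apps respond to gestures. This is not Samsung’s actual software; the gesture names, app names and the `handle_gesture` function are all hypothetical and exist purely for illustration.

```python
from typing import Optional

# Hypothetical lookup table: (gesture, foreground context) -> action.
# Only contexts built in by the manufacturer have an entry.
NATIVE_HANDLERS = {
    ("hover_palm", "screen_off"): "show_notifications",   # Quick Glance
    ("wave_vertical", "email"): "scroll_page",            # Air Jump
    ("wave_vertical", "browser"): "scroll_page",          # Air Jump
    ("wave_horizontal", "incoming_call"): "accept_call",  # Air Call Accept
}


def handle_gesture(gesture: str, context: str) -> Optional[str]:
    """Return the action mapped to a gesture, or None if nothing handles it."""
    return NATIVE_HANDLERS.get((gesture, context))


# A native app reacts to the gesture...
print(handle_gesture("wave_vertical", "browser"))
# ...while the same gesture in a third-party app does nothing.
print(handle_gesture("wave_vertical", "third_party_game"))
```

With a fixed table like this, every app outside the handful of built-in ones simply ignores the gesture, which is roughly the experience users ended up with.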

Air Gestures Today

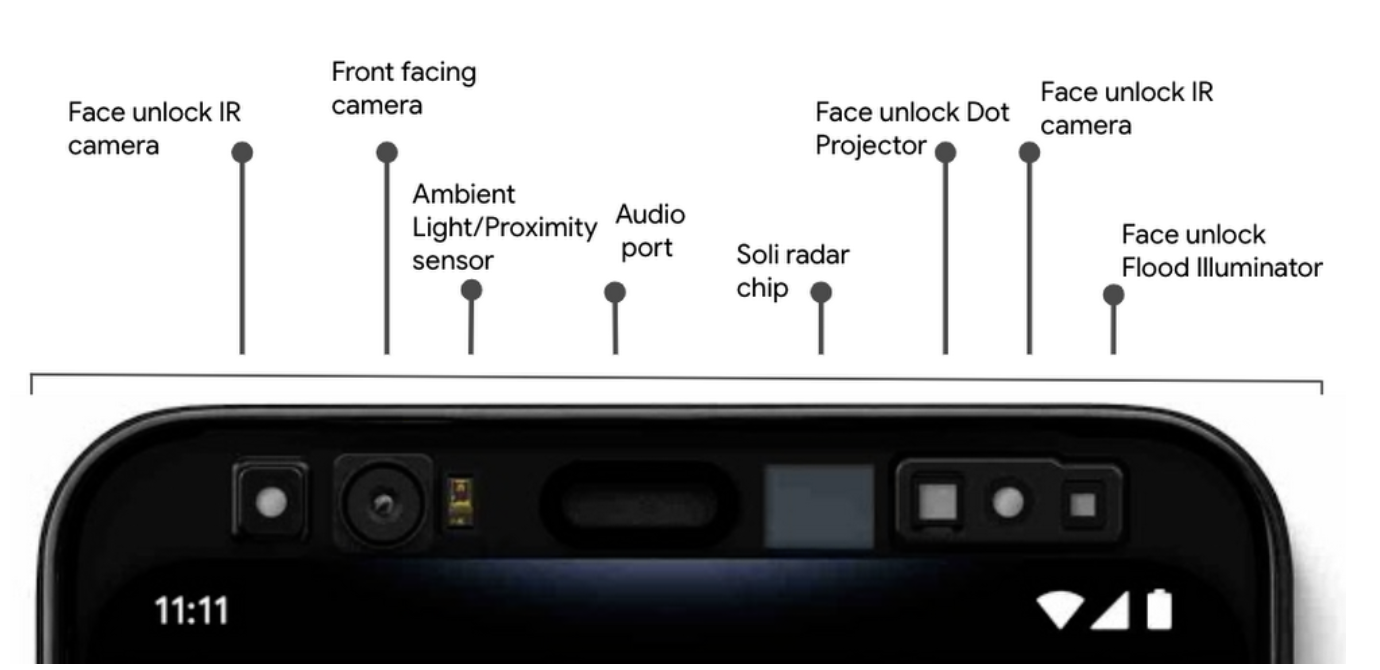

While the feature did die out, we do get to see it implemented in some devices today, namely the LG G8 ThinQ and the latest Google Pixel 4 lineup.

Talking about LG first, the company introduced the feature under the name Air Motion. It provides essentially the same functionality as Samsung’s version. With the phone resting on a surface, the user can hover a hand over the camera sensor to either switch between apps or control their music. While, again, the idea sounds appealing, the implementation is quite awkward. The phone uses its “Z Camera” to digitally map the user’s hand gestures and turn them into inputs, and the user has to make a strange claw-like gesture with their hand to get it to work.

Moving over to the Google side of things, earlier this past week Google announced its flagship devices for 2019-20: the Pixel 4 and Pixel 4 XL. Alongside other new ideas (not to mention the dual-camera setup), the phones feature a new “Motion Sense” feature. This is Google’s take on air gestures, and while it is easier to use than LG’s option, it gives much the same level of control. The phone allows users to dismiss alarms and notifications with a swipe of the hand and even lets them control their music. One thing does go in Google’s favour, though: its implementation is smarter. Not only does the sensor prepare the phone for face unlock as the user picks up the device, it also learns, making it faster over time. Google also included an on-stage demonstration of the feature with a Pokémon app, which does hint at the feature expanding to other apps. In any case, Google’s implementation trumps Samsung’s and LG’s by far.

Does Air Gesture Have A Future? Concluding Thoughts

After discussing the timeline for Air Gesture, the question above arises. Where does one see Air Gesture in the future? In my opinion, there are two aspects to this question. Firstly, we need to look at what a company implements and how it implements it. Secondly, we need to ask whether other substitutes are available that trump it completely.

Talking about the first aspect, we can see above that Google’s implementation of the feature is far better than those of the past. Yes, technological advancement does play a role in it, but so do Google’s integration and AI. Making a feature available and functional is one thing, but making it smart is what makes the feature actually worth something. Google has done just that. While not anything major, the reduction in face unlock delay is an excellent example of this point. One may even argue, though, that the device’s accelerometer and gyroscope could be used to give a similar experience. Perhaps that is true, and it is also the point that lets us transition to the second aspect of the question.

We see that there are a bunch of ways to interact with our mobile phones besides having to hold them in our hands. Voice commands are a good example. Not only do they allow users to have their notifications read to them, they also allow music control and even sending text messages or calling someone without having to pull out the phone. These features are also implemented in smartwatches, and we see them being used to work around a smartphone ever so easily. Next to these options, Air Gestures don’t really make much of a case for themselves.

To conclude from the arguments drawn above, yes, Air Gesture is a feature that does open many doors for interesting applications. Google has shown us that. But, at the same time, we see a lot of people already comfortable with other modes of interacting with their devices, such as voice assistants and other alternatives. Not to mention, Air Gestures continue to be glitchy and demand precision, which takes the practicality out of the equation. We see this quite clearly with LG’s implementation in its G8 ThinQ. Perhaps we will see, with time, how Google evolves the feature in its new smartphones. There is also the lingering issue of the strain this extra, always-active sensor puts on battery life. As seen in many reviews online and a couple of hands-on impressions, the Google Pixel 4 isn’t much of a champ in the battery department. With the new 90Hz display (if it is always active), the device averages around 4 hours of screen-on time. Perhaps if it weren’t for the Motion Sense sensor, the device could have had better battery life.

If this idea continues to stagnate as it did in previous iterations such as Samsung’s, restricted to a couple of gestures for snoozing alarms and switching between tracks, it will become quite clear that Air Gesture is a dying feature that never promised practicality and was never more than a gimmick or a party trick.