CPU Ready: The Silent Hypervisor Killer

CPU Ready is something you may not be familiar with. At first glance it may sound like a good thing, but unfortunately it is not. CPU Ready has been plaguing virtual environments for longer than we have had a name for it. VMware defines it as the “Percentage of time that the virtual machine was ready, but could not get scheduled to run on the physical CPU. CPU Ready time is dependent on the number of virtual machines on the host and their CPU loads.” Hyper-V only recently started providing a comparable counter (Hyper-V Hypervisor Virtual processor\CPU Wait time per dispatch), and other hypervisors may still not expose this metric.

To understand CPU Ready, we need to understand how hypervisors schedule virtual CPUs (vCPUs) onto physical CPUs (pCPUs). When a VM needs CPU time, its vCPU(s) must be scheduled onto pCPU(s) so that its processes and threads can actually execute. In an ideal world, there are no resource conflicts or bottlenecks when this happens: when a single-vCPU VM needs time on a pCPU, a pCPU core is free and CPU Ready stays minimal. It is important to note that CPU Ready always exists; in an ideal world it is simply small enough not to be noticed.

In the real world, one of the benefits of virtualization is that you can bet that many of your VMs will not spike all of their vCPUs at the same time, and if they are very low-usage VMs, you may even estimate how heavily you can load a physical host based on its CPU and RAM usage. In the past, vCPU-to-pCPU ratios of 4:1, or even 10:1 depending on workload, were commonly recommended. For example, you may have a single quad-core processor but run four VMs with 4 vCPUs each, giving you 16 vCPUs to 4 pCPUs, or 4:1. What engineers started to see, though, was that these environments were terribly slow and they could not figure out why. RAM usage seemed fine, CPU usage on the physical hosts might be very low (under 20%), and storage latency was extremely low, yet the VMs were extremely sluggish.
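As a quick sanity check on the ratio arithmetic, here is a minimal Python sketch. The function name `vcpu_ratio` is hypothetical, and it assumes you count physical cores only (not hyperthreads) as pCPUs.

```python
def vcpu_ratio(vm_vcpus: list[int], physical_cores: int) -> float:
    """Return the vCPU:pCPU consolidation ratio (vCPUs per physical core)."""
    if physical_cores <= 0:
        raise ValueError("physical_cores must be positive")
    return sum(vm_vcpus) / physical_cores


# The example from the text: four 4-vCPU VMs on one quad-core processor.
ratio = vcpu_ratio([4, 4, 4, 4], physical_cores=4)
print(f"{ratio:g}:1")  # prints "4:1"
```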

What was happening in this scenario was CPU Ready. A queue of vCPUs ready to be scheduled was building up, but no pCPU was available to schedule them against, so the hypervisor stalled the scheduling and the guest VM saw latency. Until recent years there were few tools to detect it, which is what made it a silent killer. A Windows VM would take forever to boot, and once it finally did, clicking the Start menu would take forever to respond. You might even click again, thinking the first click had not registered, and when the VM finally caught up you would get a double click. On Linux, the VM may boot into read-only mode, or even switch its filesystems to read-only at some later point.
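To make the queueing idea concrete, here is a deliberately simplified toy model in Python. It is not how any real hypervisor scheduler works (there are no time slices, co-scheduling, or hyperthreads, and `simulate_ready` is a hypothetical name); it only shows that once more vCPUs want to run than there are pCPUs, the surplus accumulates as ready time.

```python
import random


def simulate_ready(vcpus: int, pcpus: int, busy_prob: float,
                   ticks: int = 10_000, seed: int = 1) -> float:
    """Toy model: fraction of wanted vCPU-ticks spent waiting for a free pCPU.

    Each tick, every vCPU independently wants to run with probability busy_prob.
    At most `pcpus` of them get a pCPU; the rest accrue "ready" time that tick.
    """
    rng = random.Random(seed)
    wanted = ready = 0
    for _ in range(ticks):
        runnable = sum(rng.random() < busy_prob for _ in range(vcpus))
        wanted += runnable
        ready += max(0, runnable - pcpus)
    return ready / wanted if wanted else 0.0


# One vCPU per pCPU: nobody ever waits, so this prints 0.0%.
print(f"{simulate_ready(4, 4, 0.2):.1%}")
# 16 vCPUs on 4 pCPUs (4:1), each wanting CPU only 20% of the time: some waiting appears.
print(f"{simulate_ready(16, 4, 0.2):.1%}")
```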

So how do we combat CPU Ready? A few approaches help. The first is monitoring CPU Ready metrics. With VMware, the usual guidance is not to go above 10%, but in my experience users start noticing at around 5-7%, depending on the type of VM and what it is running.
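If you collect CPU Ready percentages in a script, a small helper can apply the thresholds above. This is only a sketch: the 5% and 10% defaults come from this article, while the `ok`/`warning`/`critical` labels and the `ready_status` name are hypothetical.

```python
def ready_status(ready_pct: float, noticeable: float = 5.0, limit: float = 10.0) -> str:
    """Classify a CPU Ready percentage against the rough thresholds above."""
    if ready_pct >= limit:
        return "critical"  # at or above the 10% guidance
    if ready_pct >= noticeable:
        return "warning"   # users may start to notice
    return "ok"


for sample in (1.2, 6.0, 12.5):
    print(sample, ready_status(sample))  # 1.2 ok / 6.0 warning / 12.5 critical
```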

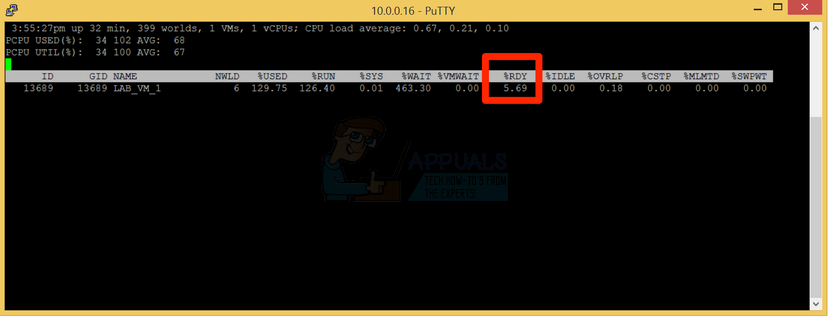

Below I will use some examples from VMware ESXi 5.5 to show CPU Ready. From the command line, run “esxtop”. Press “c” for the CPU view; the “%RDY” column shows CPU Ready. Press capital “V” to show only VMs.
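esxtop can also run non-interactively in batch mode, for example `esxtop -b -d 5 -n 12 > esxtop.csv` (batch mode, 5-second delay, 12 iterations), which writes a perfmon-style CSV. The Python sketch below pulls the per-group ready columns out of such a file. The column-header pattern is an assumption based on the usual `\\host\Group Cpu(ID:name)\% Ready` shape, so check it against your own capture; batch output also includes non-VM groups. As in the interactive view, the group-level %RDY is generally reported as the sum across a VM's vCPUs, so a multi-vCPU VM can exceed 100%. The `ready_by_group` name and the sample data are made up for illustration.

```python
import csv
import io
import re

# Assumed header shape for per-group CPU columns in esxtop batch output, e.g.
#   \\esxi01\Group Cpu(12345:web01)\% Ready
# Verify against your own capture; the exact text may vary between versions.
READY_COLUMN = re.compile(r"Group Cpu\((\d+):([^)]*)\)\\% Ready$")


def ready_by_group(csv_text: str) -> dict[str, list[float]]:
    """Return {group name: [%RDY samples]} parsed from esxtop batch-mode CSV text."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    header, samples = rows[0], rows[1:]
    result: dict[str, list[float]] = {}
    for col, title in enumerate(header):
        match = READY_COLUMN.search(title)
        if match:
            group_name = match.group(2)
            result[group_name] = [
                float(row[col]) for row in samples if len(row) > col and row[col]
            ]
    return result


# A made-up two-sample capture, for illustration only.
sample = (
    '"(PDH-CSV 4.0) (UTC)(0)","\\\\esxi01\\Group Cpu(12345:web01)\\% Ready"\n'
    '"01/01/2024 00:00:05","3.50"\n'
    '"01/01/2024 00:00:10","4.25"\n'
)
print(ready_by_group(sample))  # {'web01': [3.5, 4.25]}
```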

Here you can see that %RDY is somewhat high for a fairly idle environment. In this case, my ESXi 5.5 host is itself running as a VM on VMware Fusion (a Mac hypervisor), so somewhat higher values are expected: the test VM is running on a hypervisor that is in turn running on another hypervisor.

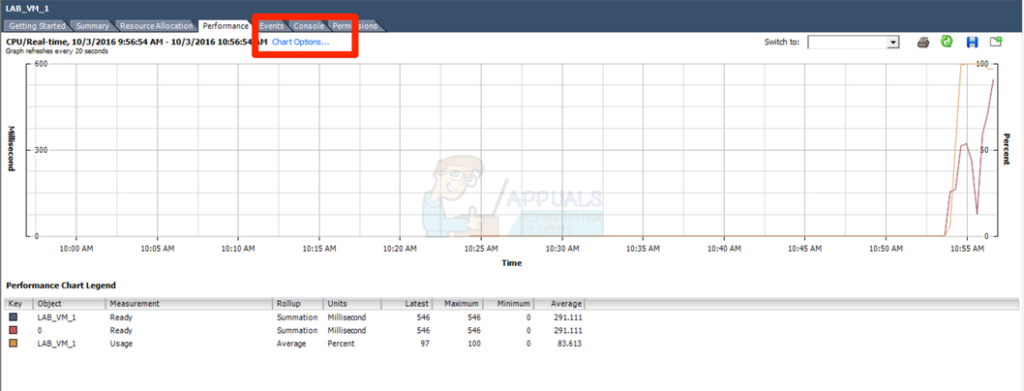

In the vSphere client, pull up the specific VM, click the Performance tab, and then click “Chart Options”.

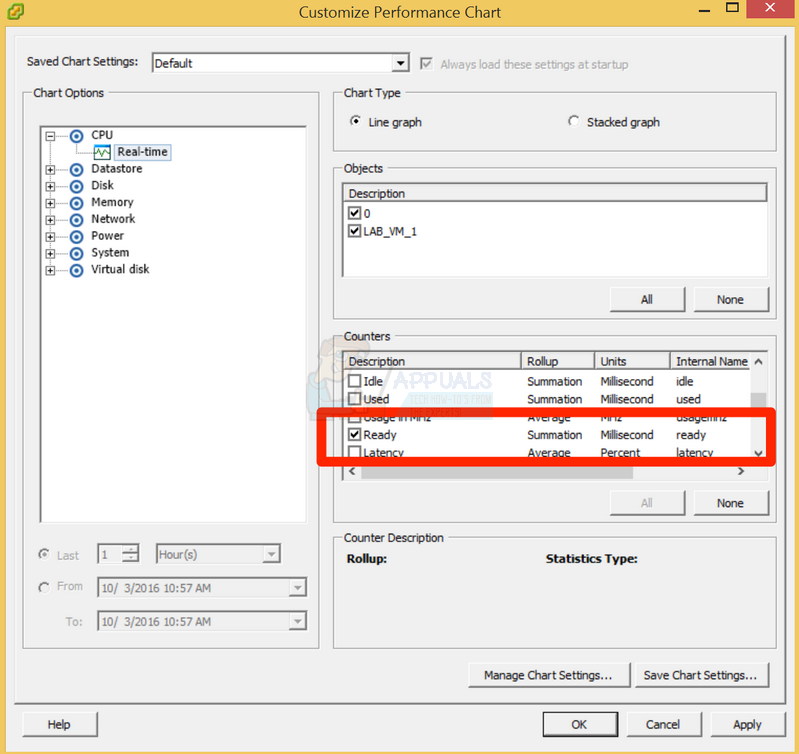

Within Chart Options, select CPU and then Real-time (if you have vCenter, you may have time ranges other than real-time). Then, under Counters, select “Ready”. You may need to deselect another counter, as the chart only allows two data types at a time.

Note that this value is a summation of ready time in milliseconds rather than a percentage. This VMware KB article explains how to convert the summation to a percentage: https://kb.vmware.com/s/article/2002181
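The conversion essentially divides the summation (milliseconds of ready time) by the length of the chart's sample interval. Below is a Python sketch of that formula. The interval table uses the standard vCenter chart intervals (20 seconds for real-time, 5 minutes for the past day, 30 minutes for the past week, 2 hours for the past month, 1 day for the past year); confirm them against the KB. The `vcpus` divisor is an assumption for VM-level values that total ready time across all of a VM's vCPUs, giving a per-vCPU average. The `ready_percent` name is hypothetical.

```python
# Chart sample intervals in seconds (standard vCenter chart intervals).
INTERVAL_SECONDS = {
    "realtime": 20,
    "day": 300,
    "week": 1800,
    "month": 7200,
    "year": 86400,
}


def ready_percent(summation_ms: float, interval: str = "realtime", vcpus: int = 1) -> float:
    """Convert a CPU Ready summation (ms per sample) into a percentage.

    Passing vcpus > 1 averages a VM-level total across its vCPUs (an assumption
    about how the VM-level counter aggregates; check the per-vCPU instances).
    """
    seconds = INTERVAL_SECONDS[interval]
    return summation_ms * 100 / (seconds * 1000 * vcpus)


print(ready_percent(1000))           # 5.0  (1,000 ms ready in a 20 s real-time sample)
print(ready_percent(4000, vcpus=2))  # 10.0 (4,000 ms across 2 vCPUs in 20 s)
```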

When purchasing hardware, more cores help lessen the impact of CPU Ready. Hyperthreading helps as well: while it does not provide a full second core for each physical core, it usually gives the scheduler enough extra room to place vCPUs on pCPUs and helps mitigate the issue. Although hypervisor vendors are moving away from vCPU-to-pCPU ratio recommendations, you can usually do well in a moderately used environment by starting at 4:1 and adjusting from there. As you load more VMs, watch CPU latency, CPU Ready, and overall responsiveness. If you have some heavy-hitting VMs, you may want to segregate them onto separate clusters that use a lower ratio and are kept lightly loaded. On the other hand, for VMs where performance is not key and it is acceptable for them to run sluggishly, you can oversubscribe much more heavily.
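Treating 4:1 as a starting point, one quick way to see how much room a host has left is to compare allocated vCPUs against cores times the target ratio. This is a hypothetical helper (`vcpu_headroom`) that counts physical cores only, and the host figures in the example are made up; it is a planning aid, not a substitute for watching CPU Ready as you add load.

```python
import math


def vcpu_headroom(physical_cores: int, allocated_vcpus: int, target_ratio: float = 4.0) -> int:
    """vCPUs that can still be allocated before exceeding target_ratio:1 (never negative)."""
    budget = math.floor(physical_cores * target_ratio)
    return max(0, budget - allocated_vcpus)


# Hypothetical host: 2 sockets x 8 cores = 16 cores, 40 vCPUs already allocated.
print(vcpu_headroom(16, 40))       # 24 more vCPUs at 4:1
print(vcpu_headroom(16, 40, 2.0))  # 0 at 2:1 (the host is already over that budget)
```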

Sizing VMs appropriately is also a powerful tool against CPU Ready. Many vendors recommend specifications well beyond what the VM actually needs. Traditionally, more CPUs and more cores meant more power. The problem in a virtual environment is that the hypervisor has to schedule all of a VM's vCPUs onto pCPUs at roughly the same time, and locking that many pCPUs can be problematic: for an 8 vCPU VM, you have to lock 8 pCPUs so that its vCPUs can be scheduled together. If that VM only uses 10% of its total vCPU capacity at any given time, you are better off bringing its vCPU count down to 2 or 4. It is better to run a VM at 50-80% CPU with fewer vCPUs than at 10% with more. The issue exists in part because guest operating system CPU schedulers are designed to spread work across as many cores as possible; if they instead saturated the cores they were using before adding more, it might be less of a problem. An oversized VM may itself perform well but act as a “noisy neighbor” for other VMs, so you usually have to go through all the VMs in the cluster and “right size” them to see performance gains.
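The right-sizing idea can be expressed as a small calculation: convert observed peak utilization into how many vCPUs' worth of work the VM actually does, then pick the smallest vCPU count that keeps peak utilization at or below a target. The Python sketch below is one hypothetical way to do it (`right_size` is not a real tool). The 80% target matches the top of the 50-80% range above, the two-vCPU floor (`min_vcpus`) is my own assumption, and integer percentages avoid floating-point rounding.

```python
def right_size(vcpus: int, peak_util_pct: int, target_pct: int = 80, min_vcpus: int = 2) -> int:
    """Suggest the smallest vCPU count that keeps peak utilization <= target_pct.

    Work is measured in "percent of one vCPU", e.g. 8 vCPUs at 10% = 80,
    i.e. 0.8 vCPUs' worth of work.
    """
    demand = vcpus * peak_util_pct
    needed = -(-demand // target_pct)  # integer ceiling division
    return max(min_vcpus, needed)


print(right_size(8, 10))  # 2: 0.8 vCPUs of work, raised to the 2-vCPU floor
print(right_size(8, 40))  # 4: 3.2 vCPUs of work fits in 4 vCPUs at 80%
```

A VM that is pinned at 100% may need more than this suggests, since a saturated VM hides its true demand.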

Often, by the time you have run into CPU Ready, it is difficult to start right sizing VMs or to upgrade to processors with more cores. In that situation, adding more hosts to your cluster spreads the load and can help. If some hosts have more cores/processors than others, keeping high-vCPU VMs on those higher-core hosts can help as well. Make sure each physical host has at least as many cores as the VM has vCPUs, if not more; otherwise it will be very slow and difficult to schedule the excess vCPUs onto pCPUs, since they need to be locked at roughly the same time.
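A simple placement check based on this rule is sketched below in Python. The `Host` type, the `eligible_hosts` function, and the host names are hypothetical; in practice this kind of rule is usually enforced with the hypervisor's own placement features (for example, VM/host affinity rules) rather than a script.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    physical_cores: int


def eligible_hosts(vm_vcpus: int, hosts: list[Host]) -> list[str]:
    """Hosts with at least as many physical cores as the VM has vCPUs, most cores first."""
    fits = [h for h in hosts if h.physical_cores >= vm_vcpus]
    return [h.name for h in sorted(fits, key=lambda h: h.physical_cores, reverse=True)]


cluster = [Host("esx-a", 8), Host("esx-b", 24), Host("esx-c", 16)]
print(eligible_hosts(12, cluster))  # ['esx-b', 'esx-c']
```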

Finally, your hypervisor may support reservations and limits on VMs, and sometimes these get set accidentally. Aggressive settings can cause CPU Ready even when the underlying resources are actually available. It is usually best to use reservations and limits sparingly and only when absolutely needed; for the most part, a properly sized cluster will balance resources appropriately without them.
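One way to hunt for accidental limits is to compare each VM's CPU limit with what its vCPUs could consume at full speed. The sketch below assumes you have already exported an inventory; the `VmCpuSettings` record, the `limited_vms` function, and the VM names are hypothetical. vSphere reports an unlimited CPU limit as -1, which this sketch represents as `None`, and `core_mhz` is an assumed nominal core speed that ignores turbo and hyperthreading. In esxtop, my understanding is that time withheld by a limit shows up as %MLMTD and is counted within %RDY.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VmCpuSettings:
    name: str
    vcpus: int
    limit_mhz: Optional[int]  # None = unlimited (vSphere reports this as -1)


def limited_vms(vms: list[VmCpuSettings], core_mhz: int) -> list[str]:
    """Names of VMs whose CPU limit is below what their vCPUs could use at full speed."""
    flagged = []
    for vm in vms:
        if vm.limit_mhz is not None and vm.limit_mhz < vm.vcpus * core_mhz:
            flagged.append(vm.name)
    return flagged


inventory = [
    VmCpuSettings("app01", 4, None),
    VmCpuSettings("db01", 4, 2000),    # 2 GHz cap on 4 x 2.4 GHz vCPUs
    VmCpuSettings("util01", 1, 5000),  # cap above one core's speed: no effect
]
print(limited_vms(inventory, core_mhz=2400))  # ['db01']
```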

In summary, the best defense against CPU Ready is knowing that it exists and how to check for it. You can then systematically determine the best mitigation steps for your environment given the above. For the most part, the information in this article applies universally to any hypervisor, although the screenshots and charts apply specifically to VMware.