AMD CEO Hints at Potential MI400 Days After Leaked MI300C AI Accelerator

AMD recently introduced the Instinct MI300 series, which is based on the CDNA3 and Zen 4 architectures. The MI300X stands out in the lineup as a GPU-only accelerator with 192GB of HBM3 memory. It targets large language models (LLMs), which need both large memory capacity and high memory bandwidth.

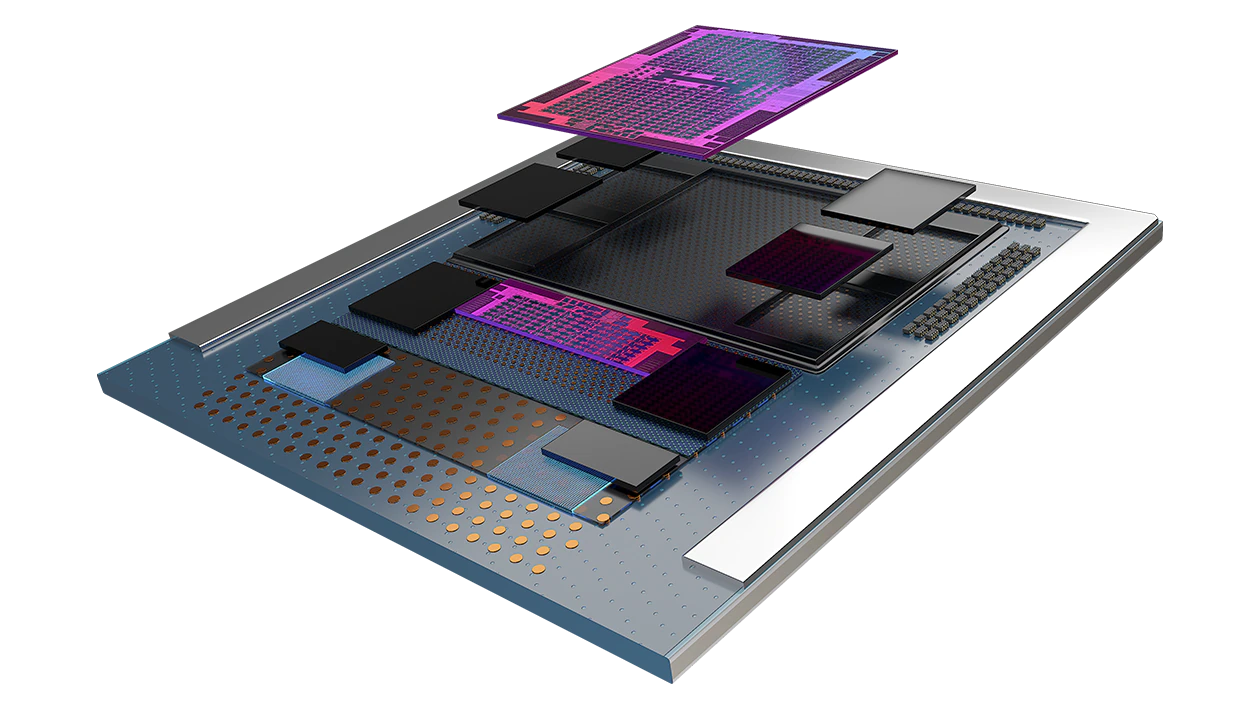

The MI300 series uses die stacking, which lets AMD combine chiplets in different configurations. The MI300A, a data center APU that integrates Zen 4 CPU cores with CDNA3 GPU cores, shares the same chiplet design as the MI300X. With its unified memory architecture and 128GB of HBM3 memory, the MI300A is aimed at high-performance computing (HPC) and artificial intelligence (AI) workloads.

Hints at the MI300’s Successor

AMD and its partners have previously hinted at the MI400 series, a high-end data center accelerator line that is presumably based on the CDNA4 architecture and will succeed the MI300 series. AMD has not yet announced specific SKUs for the MI400 series; however, CEO Dr. Lisa Su reaffirmed the company’s plans for the series during its recent Q2 earnings call.

When you look across those workloads and the investments that we’re making, not just today, but going forward with our next generation MI400 series and so on and so forth, we definitely believe that we have a very competitive and capable hardware roadmap. I think the discussion about AMD, frankly, has always been about the software roadmap, and we do see a bit of a change here on the software side.

AMD CEO Dr. Lisa Su

Following the MI300, which brings Zen CPU cores into the Instinct lineup, the MI400 is expected to be the fifth generation of Instinct accelerators and to remain branded separately from Radeon. It should follow the same pattern as its predecessors, including the Arcturus-based MI100 and the Aldebaran-based MI200 series.

The MI400 is also rumored to use AMD’s new XSwitch interconnect, which could help AMD compete with NVIDIA’s NVLink-based systems.

AMD Instinct Accelerator Specifications

| Series | Year | Node / Architecture | SKU | GPU CUs | CPU Cores | Memory | TDP |
|---|---|---|---|---|---|---|---|
| MI400 | 2024+ | CDNA4/Zen 5 (?) | TBC | TBC | TBC | TBC | TBC |
| MI300 | 2023 | 5/6nm CDNA3/Zen 4 | MI300A | 228 | 24 | 128 GB HBM3 | TBC |
| | | | MI300X | 304 | – | 192 GB HBM3 | 750W |
| | | | MI300C | – | 96 | 128 GB HBM3 | TBC |
| | | | MI300P | 152 | – | 64 GB HBM3 | TBC |
| MI200 | 2022 | 6nm CDNA2 | MI250X | 220 | – | 128 GB HBM2e | 560W |
| | | | MI250 | 208 | – | 128 GB HBM2e | 560W |
| | | | MI210 | 104 | – | 64 GB HBM2e | 300W |
| MI100 | 2020 | 7nm CDNA1 | MI100 | 120 | – | 32 GB HBM2 | 300W |
| MI60 | 2018 | 7nm Vega 20 | MI60 | 64 | – | 32 GB HBM2 | 300W |

Source: SeekingAlpha